Petney CakePHP Big Data Handler

PHP, CakePHP, MySQL, Database optimization

Group Project

Date: 1st Feb 2017 - Present

PHP, MySQL, Big Data

Introduction

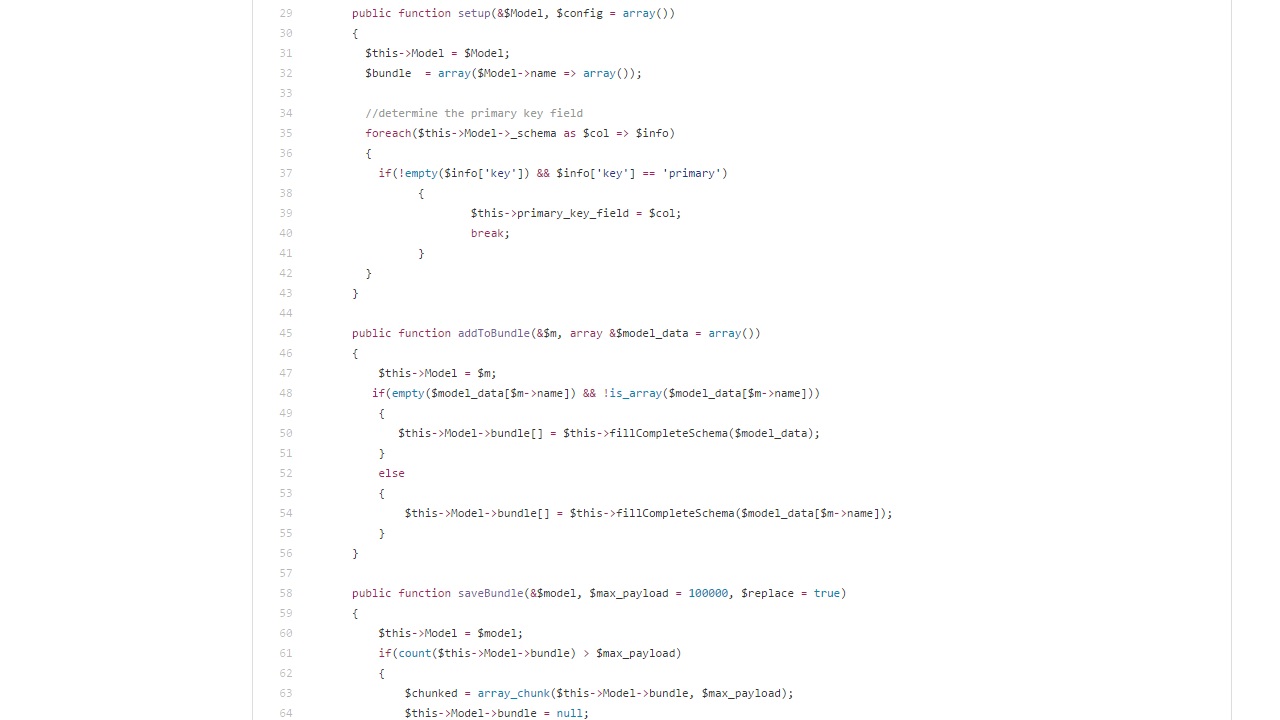

I've recently been using CakePHP while working with a team of developers on multiple applications. However, we ran into efficiency issues when working with large amounts of data. It’s not uncommon for us to insert (or update) hundreds of thousands of rows in a single process, and we also needed an efficient way to work with those hundreds of thousands of records. After some investigation, I narrowed our efficiency problem down to CakePHP sending data to the database one row at a time. This works well for small applications, but it really slows things down once large amounts of data come into play.

I fixed this by creating a behavior that lets a model hold a “bundle” of objects in memory. When the bundle is saved, all of its model objects are written to the database as bulk inserts, 100,000 items per insert by default. The behavior also allows CakePHP find results to be returned as a hashed array: the user specifies a ‘key’, which serves as the key of the returned associative array.
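To make the bulk-insert idea concrete, here is a minimal sketch in plain PHP of how a list of rows can be split into chunks, with one multi-row `INSERT` statement built per chunk. It is not the behavior's actual code: the function name `buildBulkInserts`, its parameters, and the returned array shape are all hypothetical, and it assumes every row is a flat array with the same columns as the first row.

```php
<?php
/**
 * Build one multi-row INSERT statement per chunk of rows.
 * Hypothetical helper for illustration only; not the behavior's real API.
 * Table and column names are assumed to be trusted (they are not escaped).
 */
function buildBulkInserts(string $table, array $rows, int $chunkSize = 1000): array
{
    if ($chunkSize < 1) {
        throw new InvalidArgumentException('chunkSize must be at least 1');
    }
    $rows = array_values($rows);
    if (empty($rows)) {
        return [];
    }

    // The first row decides the column list for every statement.
    $columns = array_keys($rows[0]);
    $columnSql = '`' . implode('`, `', $columns) . '`';

    // One "(?, ?, ...)" group per row.
    $rowPlaceholder = '(' . implode(', ', array_fill(0, count($columns), '?')) . ')';

    $statements = [];
    foreach (array_chunk($rows, $chunkSize) as $chunk) {
        $sql = "INSERT INTO `{$table}` ({$columnSql}) VALUES "
            . implode(', ', array_fill(0, count($chunk), $rowPlaceholder));

        // Flatten the chunk's values in the same order as the placeholders.
        $params = [];
        foreach ($chunk as $row) {
            foreach ($columns as $column) {
                $params[] = $row[$column] ?? null;
            }
        }
        $statements[] = ['sql' => $sql, 'params' => $params];
    }

    return $statements;
}
```

Each returned entry could be passed to a prepared statement, so the database sees one round trip per chunk instead of one per row. The sketch defaults to 1,000 rows per chunk rather than 100,000 because MySQL prepared statements accept at most 65,535 placeholders; a larger chunk would need a different way of embedding the values.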

Usage

First, have your model use the behavior:

Next, let's insert 100,000 rows with a single call:

To fetch a hashed result set from the database, call the fetchHashedResult() function:

The previous function call returns an associative array, where each object’s key is built from the ‘key’ you specified. If you would like to md5 the key, you can add the ‘useHash’ => true value to the parameter array.
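The re-keying step can be sketched in plain PHP as follows. This is an illustration under assumptions, not the body of fetchHashedResult(): the helper name `hashRows` is hypothetical, rows are assumed to be flat arrays, and joining several key fields by simple concatenation is a guess at how a multi-field key would be formed. The `$useHash` flag mirrors the ‘useHash’ option described above.

```php
<?php
/**
 * Re-index rows by the concatenated values of the given key fields.
 * Hypothetical helper for illustration; concatenating multiple fields
 * is an assumption, not confirmed behavior of fetchHashedResult().
 */
function hashRows(array $rows, array $keyFields, bool $useHash = false): array
{
    $hashed = [];
    foreach ($rows as $row) {
        // Build the key from each requested field, in order.
        $key = '';
        foreach ($keyFields as $field) {
            $key .= (string) ($row[$field] ?? '');
        }
        if ($useHash) {
            $key = md5($key); // fixed-length 32-character key
        }
        // Rows that produce the same key overwrite earlier ones.
        $hashed[$key] = $row;
    }
    return $hashed;
}

$rows = [
    ['sku' => 'a1', 'name' => 'Widget'],
    ['sku' => 'b2', 'name' => 'Gadget'],
];
$bySku = hashRows($rows, ['sku']);
echo $bySku['b2']['name'], PHP_EOL;
```

With the rows indexed this way, finding a record by its key is a single array access instead of a loop over the whole result set, which is the main benefit when the result holds hundreds of thousands of rows.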

Entire script:

Notable Accomplishments

- Developed a useful big data handler in CakePHP

- Learned about CakePHP data handling

- Streamlined the creation of CakePHP applications